Monitoring Model Drift: Early-Warning Signs of AI Failure Every Ops Leader Should Track

AI has revolutionized business decision-making, but AI failure often creeps in not with a loud crash, but a quiet decay. Scientific Reports notes that 91% of ML models degrade over time, a stunning statistic that should grab every tech leader’s attention. In other words, the majority of today’s AI models that perform well initially will stop working as intended in days or weeks. [Source]

Why does this happen? The answer is simple: things change.

The data being put into a model in production is different from the data it was trained on in real-time scenarios. This phenomenon, known as model drift, is the biggest culprit behind many AI implementation failures.

For instance, in the healthcare industry, every AI and machine learning (ML) model is unique, and the data being processed can vary across different departments. Although a model may deliver perfect results during production, it can experience drift when handling real-time patient data that includes variations in age, race, gender, location, and medical conditions.

In such cases, even a slight variation in the model’s output could have significant consequences. Therefore, continuous monitoring of model performance is essential and should be regarded as a requirement rather than just a strategic option.

What Is Model Drift and Data Drift?: Understanding the Silent AI Performance Killer

1. ML Model Drift:

One of the least discussed yet most important issues in AI/ML deployment is model drift. Simply put, model drift (also known as model decay or model degradation) refers to the degradation of a machine learning model’s performance over time. A model that was hitting high accuracy last month might slowly start making errors as time goes on – effectively a slow march toward AI failure if left unchecked.

This degradation is so common that industry leaders call it “AI aging,” a term for the temporal quality degradation of AI models as they get older. Essentially, model drift is the manifestation of AI model failure over time. The model gradually or sometimes abruptly fails to generalize to new data as it once did.

2. Data Drift:

Data drift refers to changes in the input data’s statistical distribution or features compared to what the model saw during training. For example, imagine an AI model trained on customer behavior data from summer, when winter comes, customer patterns shift, and the input data no longer “looks like” the training set.

3. Concept Drift:

Concept drift is a change in the relationship between inputs and the target outcome. For instance, if the definition of a “fraudulent transaction” evolves over time, the model’s concept of fraud becomes outdated. Henceforth, the initial model needs a revision or a new definition of a fraudulent transaction.

It’s important to distinguish these terms. Data drift is about the world’s data changing, while model drift is about the model’s predictive performance changing as a result of that data change. In practice, any AI implementation failure that happens after deployment often ties back to one or both of these drifts.

Why Do AI Models Drift? 5 Top Reasons Behind AI/ML Model Failures

Why do even well-trained AI models fail over time? The fundamental reason is that the world is non-stationary and data sources keeps on changing every minute. But let’s break down the specific drivers of model drift. Recognizing these causes will help you anticipate and mitigate AI model failure before it happens:

1. Changing Real-World Conditions (Data Drift):

Over time, real-world data evolves. Customer preferences shift, economic conditions fluctuate, new slang or jargon appear, sensor equipment gets updated, you name it. When the input data distribution shifts, the model is seeing something unfamiliar.

For example, a recommendation system trained on last year’s user behavior may start faltering this year because user tastes changed. If the model’s environment changes faster than its training data can keep up, AI failure is looming.

2. Adversarial Changes:

In some cases, people or systems deliberately change behavior to mislead an AI. For example, if fraudsters learn the patterns an AI uses to detect fraud, they will adapt their tactics. The AI’s performance will drift as the bad actors stay a step ahead. These adversarial changes lead to ML model failure unless the model is updated to new tactics.

3. Use Case or Context Shift:

An AI model that performs well in one healthcare setting can fail in another. This phenomenon is known as context drift. Imagine training an AI model using patient data from a large urban hospital with cutting-edge diagnostic equipment and diverse demographics. If that same model is deployed in a rural clinic with different technologies, patient behaviors, or disease prevalence, its performance could significantly degrade. The differences in context cause AI implementation failure because the model’s assumptions no longer hold.

Learn more about AI in healthcare.

4. Temporal Effects and Seasonality:

Time sensitivity is a major factor leading to AI failure in recent times. Some models simply become stale as time passes, even if nothing obvious changes in the data. There could be hidden seasonal effects or gradual trends. A retail sales predictor might slowly drift off-target if it wasn’t trained to recognize that every holiday season, patterns change. If a model isn’t regularly refreshed, model decay is inevitable due to the relentless march of time.

5. AI Aging:

Sometimes, an AI model doesn’t fail because the data has changed, but because the model itself naturally loses accuracy over time. Even if nothing major shifts in the data, small errors can build up, hidden biases in the original training can grow stronger, or random quirks in how the model learned can start to show up later.

In other words, an AI model might appear fine and then suddenly hit a cliff of failure. This intrinsic aging effect is a critical contributor to AI failure that can be hard to predict without careful monitoring.

The Impact of Model Drift on AI Success and ROI

Model drift doesn’t just cause technical headaches; it has real business consequences. When left unmanaged, drift can turn a successful pilot into an AI implementation failure, eroding the ROI that justified the project in the first place. Let’s examine the impacts of unchecked model drift on AI performance and the bottom line:

1. Quiet Failures, Big Consequences:

AI that keeps running but loses accuracy, especially in high-stakes areas like healthcare or fraud detection, can do more harm than good.

2. Loss of Trust:

When forecasts fail, leaders lose trust. One bad drift story can derail future AI adoption across an entire organization.

3. Financial Fallout:

From bad trades to wasted ad spend, drifted models and AI failures bleed money, and while they underperform, you miss out on growth opportunities.

4. Skyrocketing Maintenance No monitoring?

Expect emergency repairs, rushed retraining, and rising costs as unchecked drift snowballs into bigger issues.

5. Legal & Ethical Pitfalls

In industries like finance or healthcare, unnoticed bias or errors caused by drift can lead to regulatory trouble and brand damage.

In summary, model drift can quietly convert an AI success into an AI failure story. It’s like a leak in a ship – if not patched, eventually the ship sinks. High-performing AI requires continuous vigilance.

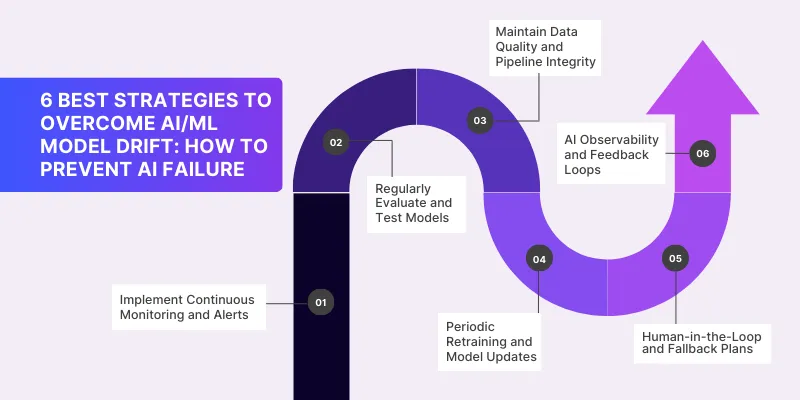

6 Best Strategies to Overcome AI/ML Model Drift: How To Prevent AI Failure

While model drift and AI aging are unavoidable, the good news is they are manageable. Here are several proven strategies to overcome model drift and prevent AI model failure in production:

1. Implement Continuous Monitoring and Alerts:

You can’t fix what you don’t see. Establish a robust model monitoring system that tracks performance metrics and data drift metrics in real-time. Set up automated alerts to flag when metrics deviate beyond acceptable thresholds. The alerts should be flexible and prioritized. You don’t want to overwhelm the team with every minor change, but you do want immediate notification of significant drops in performance or spikes in data drift.

Modern MLOps platforms and AI observability tools can monitor data drift, model outputs, data integrity, and even traffic patterns.

2. Regularly Evaluate and Test Models (AI Performance Checkups):

Just like you schedule maintenance for equipment, schedule periodic evaluations for your models. For example, recalculate performance on a recent sample of data to see if there is any degradation monthly. Additionally, perform validation tests to detect bias or other issues that may cause concept drift in the target or fairness aspects.

If you have the capacity, run shadow deployments or A/B tests with a newer model version to see if the current production model is lagging. Proactive testing can reveal drift before it affects users.

3. Maintain Data Quality and Pipeline Integrity:

Many AI failures blamed on model drift are actually due to data quality issues. Ensure your data pipelines are robust, monitor for missing or corrupted data, and validate that upstream changes haven’t introduced inconsistencies. A sudden model performance drop might be fixed by cleaning a pipeline issue rather than retraining the model. It’s wise to implement anomaly detection on the input data itself.

Additionally, continuously perform EDA (Exploratory Data Analysis) on new data to spot emerging anomalies or shifts. A well-disciplined data engineering practice will prevent a lot of drift caused by garbage-in.

Remember, garbage data leads to model drift leads to AI failure.

4. Periodic Retraining and Model Updates:

This is the most direct countermeasure to model drift: retrain your model regularly with fresh data. The cadence can vary – some teams retrain weekly, others monthly, some whenever performance drops by a certain amount. The Scientific Reports study emphasizes that models do not remain static and will need updates. When retraining, consider strategies like:

5. Human-in-the-Loop and Fallback Plans:

For critical systems, always have a fallback for when the AI is uncertain or likely drifting. This might mean routing cases to human experts when the model’s confidence is low or when an anomaly is detected. A human-in-the-loop approach can catch AI failures before they propagate. Also plan for graceful degradation: if a model is detected to have drifted significantly, what’s the fail-safe? Perhaps revert to a simpler older model or rules engine until it’s fixed.

6. AI Observability and Feedback Loops:

Treat your models as living systems that produce telemetry. Embrace AI observability practices: track not just predictions and accuracy, but also input data profiles, latency, data integrity, etc., in comprehensive dashboards. When an issue is spotted, perform root cause analysis to diagnose whether it’s data drift, concept drift, or something else. Importantly, close the feedback loop by feeding the insights back to model improvement.

For example, if you discover the model fails on a new segment of data, gather those failure cases and include them in the next training set. This constant loop of monitor->detect->diagnose->retrain->redeploy is at the heart of effective MLOps.

By implementing these strategies, organizations can dramatically reduce the risk of AI model failures in production. Instead of reacting to failure, you’ll be preventing it or catching it at an early stage. Remember, model drift is natural, but letting it turn into AI failure is not.

With diligent monitoring, timely retraining, and strong data engineering practices, your AI systems can deliver sustained value. Think of it as a continuous improvement process.

Data Engineering & EDA: Amzur’s Approach to Keeping AI Implementation and Success on Track

Even the best AI models can go off track if the data behind them isn’t solid. That’s why Amzur puts a strong focus on data engineering and Exploratory Data Analysis (EDA). EDA helps teams truly understand their data before building or training a model.

But it’s not just a one-time task; EDA is a continuous practice. By regularly checking data for issues, trends, or shifts, we catch problems early, before they hurt model performance.

With reliable, well-prepared data, models stay accurate longer and are less likely to drift. Think of EDA as an early warning system: it tells us when the data is changing, so we can take action before things go wrong.

Amzur’s Data Engineering Best Practices:

Our data engineering services encompass setting up robust data pipelines, implementing data governance, and automating the monitoring of data flows. We ensure that as your business grows and data evolves, the pipeline transforms and validates data in a consistent way.

For example, we build checks that automatically flag if a data source’s schema changed or if a key feature suddenly has unusual missing values. These data checks are complemented by EDA reports that periodically summarize how the live data compares to historical training data. By integrating these practices, we help businesses maintain a single source of truth and high data integrity.

Don’t Let AI Failure Drain Your Budgets & Efforts:

In the fast-evolving landscape of AI, model drift is the quiet foe that every ops leader must learn to defeat. It is the leading cause behind many silent AI failures, yet with awareness and action, it can be managed.

The message is clear: AI implementation failure is not inevitable if you proactively monitor and maintain your models. Treat your models as living products that need care, not disposable one-off code. By tracking the early warning signals of model drift and responding decisively, you can ensure your AI projects deliver sustained success and ROI.

For organizations that invest in these practices, AI becomes not just a buzzword but a reliable engine of innovation. The difference will show in the bottom line and in your ability to trust your AI.

Connect with Amzur AI experts to get a detailed 360-degree understanding of your AI investments.

Frequently Asked Questions

What is model drift in AI, and why should I care?

Model drift refers to the degradation of an AI/ML model’s performance over time as real-world data or conditions change. You should care because model drift is extremely common – studies show over 90% of models experience it.

How do I identify early signs of AI model failure due to drift?

Early signs include declining accuracy or KPI metrics, changes in the distribution of model outputs, and data drift in input features (the incoming data no longer resembles training data). Setting up monitoring for performance metrics and data statistics is key.

What can we do to prevent AI/ML model failures from drift?

To prevent failures, implement continuous model monitoring to catch drift early and routine retraining of models on fresh data. Establish alerts for when performance drops below a threshold. Maintain good data engineering practices (ensuring data quality and stable pipelines).

What is AI aging and how is it different from model drift?

AI aging is a term for the overall phenomenon of model performance degrading as time passes since the last training. It’s essentially model drift viewed as a function of time. AI aging encompasses all reasons a model might decline over time, even in stable environments.

Director ATG & AI Practice