Mastering AI Projects with a 6-Step AI Implementation Framework

With over two decades of industry experience, we’ve seen that AI success requires more than cutting-edge algorithms and sophisticated tools; it demands a structured, end-to-end approach. We’ve also found that 80% of the CxOs we work with struggle without a clear AI implementation framework, regardless of their AI readiness. Many have the budget to spend but are uncertain about where and how to invest it.

In fact, a 2024 BCG survey found that 74% of companies have yet to see tangible value from their AI investments. Similarly, Gartner research indicates that 85% of AI projects fail due to unclear objectives, and a staggering 87% never even reach production, often yielding little to no impact [neurons-lab.com].

These shocking statistics underline the need for a robust AI implementation framework to bridge the gap between concept and real-world impact.

This article introduces Amzur’s six-step AI implementation framework, developed through years of hands-on experience, to ensure AI initiatives deliver on their promise. We’ll walk through each stage, from initial discovery to ongoing maintenance, explaining in detail how this structured AI framework drives success.

By following this proven approach, you can get significantly more from your AI investments, avoid analysis paralysis, and turn AI implementation ideas into tangible business value.

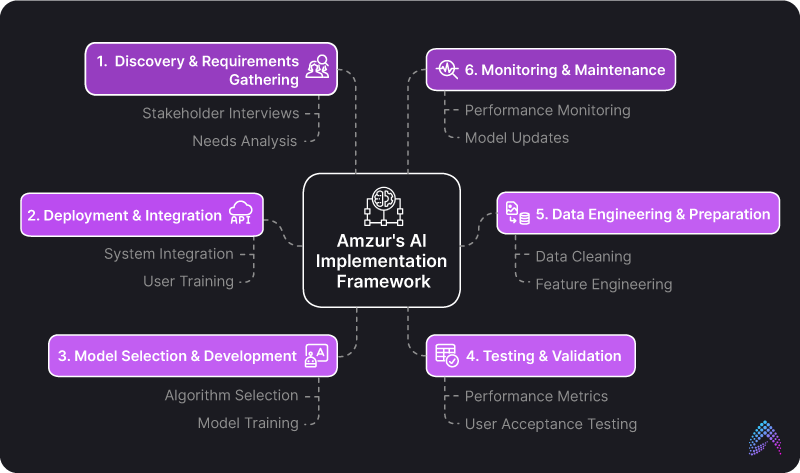

Amzur's AI Implementation Framework

Step 1: Discovery & Requirements Gathering

Every successful AI journey begins with a deep discovery phase. In our AI implementation framework, the first step is to clearly define objectives and success metrics. We collaborate with stakeholders to identify the business challenges to solve and what a successful outcome looks like. This involves analyzing existing workflows and data sources to ensure any AI solution aligns with your operations.

Unclear goals are among the leading reasons AI projects fail: Gartner notes that 85% of AI projects fail due to unclear objectives and poor project management. That’s why we put heavy emphasis on this step.

We conduct workshops with your business and IT teams to ask the right questions: Which problems are we solving? How will we measure success? By establishing concrete success metrics (whether it’s reducing churn by X% or speeding up process Y by Z hours), we create a north star for the project.
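To make “concrete success metrics” tangible, the short Python sketch below records each target agreed in Discovery together with its baseline and direction. Later phases can then check results against it mechanically. The `SuccessMetric` class, the metric names, and the numbers are hypothetical placeholders for whatever the workshops actually agree on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessMetric:
    """A measurable target agreed with stakeholders during Discovery."""
    name: str
    baseline: float            # value measured before the AI solution
    target: float              # value that counts as success
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Compare the observed value with the target in the agreed direction
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical targets: cut monthly churn from 5% to 4%, and cut
# invoice processing time from 48 hours to 24 hours.
metrics = [
    SuccessMetric("monthly_churn_rate", baseline=0.05, target=0.04, higher_is_better=False),
    SuccessMetric("invoice_processing_hours", baseline=48.0, target=24.0, higher_is_better=False),
]

observed = {"monthly_churn_rate": 0.038, "invoice_processing_hours": 30.0}
for m in metrics:
    print(m.name, "met" if m.is_met(observed[m.name]) else "not met")
```

Writing the direction down explicitly avoids a common ambiguity: for churn or processing time, a *lower* number is the win.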

This clarity at the outset builds a strong foundation of trust and alignment. Everyone from the CIO to the engineering team gains confidence that the AI initiative is tied to real business value. In short, Discovery is about ensuring the AI project is solving the right problem with stakeholder buy-in before a line of code is written.

Step 2: Data Engineering & Preparation

No AI implementation framework can succeed without quality data. Data is the fuel for AI, and in this step we make sure it’s high-octane. Our team focuses on data engineering and preparation: we collect data from all relevant internal and external sources, gather both structured and unstructured data, and clean and transform it into a usable format.

It’s often said that data scientists spend up to 80% of their time cleaning data, and that better data beats fancier algorithms. We find this holds in practice: careful data preparation prevents the classic “garbage in, garbage out” scenario. This means handling missing or corrupt values, standardizing formats, and ensuring the data truly represents the problem space. We also perform Exploratory Data Analysis (EDA), in which our experts sift through the data to uncover patterns, correlations, and outliers.
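The cleaning and EDA tasks above can be sketched with Python’s standard library alone. This is a minimal illustration that assumes a hypothetical customer extract with three problems: inconsistent date formats, untidy country codes, and missing spend values. The names `clean`, `parse_date`, and `iqr_outliers` are illustrative. Median imputation and Tukey’s IQR fences are just two common choices among many.

```python
import statistics
from datetime import datetime

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed formats present in the sources


def parse_date(value):
    """Return a date, or None if the value matches no known format (corrupt)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date()
        except ValueError:
            continue
    return None


def clean(records):
    cleaned = []
    for r in records:
        signup = parse_date(r["signup"])
        if signup is None:
            continue  # drop rows whose date cannot be repaired
        spend = r["monthly_spend"].strip()
        cleaned.append({
            "customer_id": r["customer_id"],
            "signup": signup,                         # one canonical date type
            "country": r["country"].strip().upper(),  # " us " -> "US"
            "monthly_spend": float(spend) if spend else None,
        })
    # Impute missing spend with the median of the observed values
    observed = [r["monthly_spend"] for r in cleaned if r["monthly_spend"] is not None]
    if observed:
        median = statistics.median(observed)
        for r in cleaned:
            if r["monthly_spend"] is None:
                r["monthly_spend"] = median
    return cleaned


def iqr_outliers(values, k=1.5):
    """EDA helper: values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


# Hypothetical raw extract
RAW = [
    {"customer_id": "C1", "signup": "2024-01-05", "country": " us ", "monthly_spend": "120.5"},
    {"customer_id": "C2", "signup": "05/02/2024", "country": "US", "monthly_spend": ""},
    {"customer_id": "C3", "signup": "2024-03-11", "country": "de", "monthly_spend": "95"},
    {"customer_id": "C4", "signup": "not a date", "country": "DE", "monthly_spend": "101"},
]
rows = clean(RAW)
print(rows)
print(iqr_outliers([10, 12, 11, 13, 12, 11, 10, 12, 95]))  # [95]
```

Dropping versus repairing a corrupt row is a judgment call. Here unparseable dates are dropped, but a real project might quarantine them for review instead.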

Equally important, we often gain new business insights from the EDA process, insights that can refine the problem statement or suggest quick wins. By the end of this step, we have a robust data foundation that helps reduce the risk of bias in the resulting AI models.
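One simple check toward that goal compares how often each segment appears in the training data with its expected share of the real population. The sketch below assumes hypothetical region labels and population shares. The function `representation_gaps` and its 5-percentage-point tolerance are illustrative, not a standard.

```python
from collections import Counter


def representation_gaps(segments, expected_shares, tolerance=0.05):
    """Flag segments whose share of the training data deviates from the
    expected population share by more than `tolerance` (absolute)."""
    counts = Counter(segments)
    total = sum(counts.values())
    gaps = {}
    for segment, expected in expected_shares.items():
        actual = counts.get(segment, 0) / total
        if abs(actual - expected) > tolerance:
            gaps[segment] = round(actual - expected, 3)  # positive = over-represented
    return gaps


# Hypothetical: customer regions in the training set vs. the whole customer base
training_regions = ["north"] * 70 + ["south"] * 20 + ["west"] * 10
customer_base = {"north": 0.5, "south": 0.3, "west": 0.2}
print(representation_gaps(training_regions, customer_base))
```

A flagged gap does not prove the model will be biased. It does tell the team where to collect more data, or where to check per-segment performance during testing.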

Step 3: Model Selection & Development

With objectives clear and quality data in hand, our AI implementation framework moves into model selection and development. This is where we turn concept into creation. Based on the problem requirements and data characteristics, we choose the appropriate modeling approach. Importantly, we don’t chase the flashiest algorithm for its own sake; we choose the framework and model that best fit the use case.

For some projects, a straightforward machine learning model (like a regression or decision tree) might be ideal; for others, a state-of-the-art deep learning model or a fine-tuned transformer might be warranted. We weigh factors such as accuracy needs, interpretability, latency requirements, and the volume of data to determine the right model.
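One lightweight way to make those trade-offs explicit is a weighted decision matrix. In this sketch, the candidate models, the 1-5 scores, and the weights are all hypothetical. In practice they would come from the Discovery success criteria and the EDA findings.

```python
# Hypothetical weights agreed with stakeholders (they sum to 1.0)
WEIGHTS = {"accuracy": 0.4, "interpretability": 0.3, "latency": 0.2, "data_volume_fit": 0.1}

# Hypothetical 1-5 scores from the team's assessment of each candidate
CANDIDATES = {
    "logistic_regression":    {"accuracy": 3, "interpretability": 5, "latency": 5, "data_volume_fit": 4},
    "gradient_boosted_trees": {"accuracy": 4, "interpretability": 3, "latency": 4, "data_volume_fit": 4},
    "fine_tuned_transformer": {"accuracy": 5, "interpretability": 1, "latency": 2, "data_volume_fit": 2},
}


def rank_candidates(candidates, weights):
    """Return (name, weighted score) pairs, best first."""
    scored = {
        name: sum(weights[criterion] * score for criterion, score in scores.items())
        for name, scores in candidates.items()
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


for name, total in rank_candidates(CANDIDATES, WEIGHTS):
    print(f"{name:24s} {total:.2f}")
```

The weights encode business priorities. Raising the weight on accuracy, for example, moves the transformer up the list, while raising interpretability or latency favors simpler models.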

Once the model type is selected, our AI engineers develop or fine-tune the model in an iterative, agile manner. We often start by building a proof-of-concept model to validate the approach quickly. This might involve leveraging pre-trained models or well-established AI frameworks to accelerate development. Using these AI frameworks and libraries ensures we’re standing on the shoulders of proven technology while custom-crafting the solution to your data.
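A proof of concept is only convincing if it clearly beats a trivial baseline. The sketch below assumes a hypothetical churn dataset with two features (tenure in months, support tickets) and binary labels. It compares a majority-class baseline with a decision stump (a depth-1 decision tree), both written in plain Python. In a real project, a library such as scikit-learn would normally supply the model.

```python
from collections import Counter


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction equals the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def majority_baseline(y_train):
    """Always predict the most common training label."""
    label = Counter(y_train).most_common(1)[0][0]
    return lambda row: label


def fit_stump(X, y):
    """Fit a depth-1 decision tree: try every feature/threshold split and both
    label assignments, keeping the one with the highest training accuracy."""
    best_acc, best_pred = -1.0, None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for below, above in ((0, 1), (1, 0)):
                pred = lambda row, f=f, t=t, a=below, b=above: a if row[f] <= t else b
                acc = accuracy(pred, X, y)
                if acc > best_acc:
                    best_acc, best_pred = acc, pred
    return best_pred


# Hypothetical features: [tenure_months, support_tickets]; label 1 = churned
X_train = [[2, 5], [3, 4], [1, 6], [24, 0], [36, 1], [30, 0], [18, 2], [28, 1]]
y_train = [1, 1, 1, 0, 0, 0, 0, 0]
X_test = [[2, 4], [40, 0], [5, 5], [22, 1]]
y_test = [1, 0, 1, 0]

baseline = majority_baseline(y_train)
stump = fit_stump(X_train, y_train)
print("baseline:", accuracy(baseline, X_test, y_test))  # 0.5
print("stump:   ", accuracy(stump, X_test, y_test))     # 0.75
```

On this toy data, the stump learns a single rule: churn if tenure is 3 months or less. That rule is easy to explain to stakeholders. A more complex model would need to beat it by a meaningful margin to justify its extra cost.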

Throughout development, we maintain rigorous version control and documentation, treating models as critical code assets. We also keep the business context in focus: for example, if model explainability is important for stakeholder trust or regulatory compliance, we might opt for a simpler algorithm or use techniques to make a complex model’s decisions more transparent.
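Treating models as code assets implies that any deployed version can be traced back to exactly what produced it. Here is a minimal sketch using a simple JSON record rather than any particular model-registry product. The `fingerprint` function hashes the parameters and training data with SHA-256 so that two versions can be compared. Every field name here is hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(obj) -> str:
    """Stable SHA-256 of a JSON-serializable object (keys sorted)."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def model_card(name, version, params, training_data, metrics, notes=""):
    """Build a registry entry tying a model version to the exact
    parameters and training data that produced it."""
    return {
        "name": name,
        "version": version,
        "params_sha256": fingerprint(params),
        "data_sha256": fingerprint(training_data),
        "metrics": metrics,
        "notes": notes,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


card = model_card(
    name="churn_stump",
    version="0.1.0",
    params={"feature": "tenure_months", "threshold": 3},
    training_data=[[2, 5, 1], [24, 0, 0]],
    metrics={"holdout_accuracy": 0.75},
    notes="Proof of concept; interpretable single-split rule.",
)
print(json.dumps(card, indent=2))
```

Because keys are sorted before hashing, the same parameters always produce the same fingerprint. Any change to the data or settings shows up as a different hash.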

By the end of this phase, we have a working AI model (or set of models) that meets the defined objectives on our test data.

Step 4: Testing & Validation

Even the smartest model is only as good as its validation. In our 6-step AI implementation framework, Testing & Validation is a critical checkpoint before any deployment. Here, we rigorously test the model against data it hasn’t seen to ensure it generalizes well and delivers the expected outcomes. This starts with holding out a portion of the data from training so it can be used for testing. We also use cross-validation to check that performance is consistent across different subsets of the data.
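The holdout-plus-cross-validation pattern can be sketched as follows. The split fraction, `k=5`, the fixed seed, and the placeholder mean-predicting “model” are assumptions for illustration. The key point is that the test set is set aside first, and cross-validation runs only on the remaining training data.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle once, then hold out the last `test_fraction` of rows for testing."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[: len(rows) - n_test], rows[len(rows) - n_test :]


def k_fold_indices(n, k=5, seed=42):
    """Yield (train_idx, val_idx) pairs so every index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for fold in folds[:i] + folds[i + 1 :] for j in fold]
        yield train, folds[i]


def cross_validate(rows, fit, score, k=5):
    """Fit on k-1 folds, score on the held-out fold, and return all k scores."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(rows), k):
        model = fit([rows[j] for j in train_idx])
        scores.append(score(model, [rows[j] for j in val_idx]))
    return scores


# Placeholder "model" (predict the mean target) and metric (mean absolute error)
def fit_mean(train_rows):
    ys = [y for _, y in train_rows]
    return sum(ys) / len(ys)


def mae(model, val_rows):
    return sum(abs(y - model) for _, y in val_rows) / len(val_rows)


data = [(x, 2 * x + 1) for x in range(50)]
train, test = train_test_split(data)  # test stays untouched until the final check
fold_scores = cross_validate(train, fit_mean, mae)
print([round(s, 2) for s in fold_scores])
```

A large spread between fold scores is a warning sign in itself. It suggests performance depends heavily on which data the model happens to see.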

We examine key performance metrics (accuracy, precision/recall, F1-score, etc., depending on the project) to verify whether the model meets or exceeds the success criteria defined back in Step 1. If it doesn’t, this phase sends us back to refine the model or even reconsider data features – that’s the value of an iterative framework.
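Checking metrics against the Step 1 criteria can be automated, so the go/no-go decision is explicit. This sketch computes accuracy, precision, recall, and F1 for a binary classifier from scratch. The labels, predictions, and `CRITERIA` thresholds are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}


# Hypothetical acceptance thresholds carried over from Step 1
CRITERIA = {"recall": 0.70, "precision": 0.60}

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
m = classification_metrics(y_true, y_pred)
failed = {k: m[k] for k, threshold in CRITERIA.items() if m[k] < threshold}
print(m, "PASS" if not failed else f"REVISIT: {failed}")
```

If `failed` is non-empty, that is the signal to loop back and refine the model or its features.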

However, validation in our AI framework goes beyond just metrics. We conduct scenario testing and edge-case analysis: how does the model perform on atypical inputs or in extreme conditions?

For instance, if we built a computer vision model, we’d test it on images taken in low light or from unusual angles. If it’s a predictive analytics model, we check how stable its predictions are when certain variables spike or drop. We also incorporate A/B testing and user feedback when applicable.
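A basic version of that stability check perturbs one input at a time and measures how far the prediction moves. The sketch assumes a hypothetical linear demand model and an arbitrary tolerance (`max_change`). With a real model, `predict` would call the trained model instead.

```python
def stability_report(predict, row, feature, deltas=(-0.5, -0.2, 0.2, 0.5), max_change=20.0):
    """Scale one input feature by each relative delta, record how far the
    prediction moves, and flag deltas whose change exceeds `max_change`."""
    base = predict(row)
    changes = {}
    for d in deltas:
        perturbed = dict(row)
        perturbed[feature] = row[feature] * (1 + d)
        changes[d] = predict(perturbed) - base
    flagged = {d: c for d, c in changes.items() if abs(c) > max_change}
    return base, changes, flagged


# Hypothetical demand model standing in for the real one under test
def predict_demand(row):
    return 100 + 2.0 * row["ad_spend"] - 5.0 * row["price"]


base, changes, flagged = stability_report(
    predict_demand, {"ad_spend": 50.0, "price": 10.0}, "price"
)
print(base, changes, flagged)
```

Whether a 25-unit swing from a 50% price change is acceptable is a business judgment. The code only makes the sensitivity visible.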

This thorough validation step ensures we’re not deploying a “black box” and hoping for the best; we’re deploying a vetted solution backed by evidence that it will perform and provide value in the real world.

Step 5: Deployment & Integration

Now comes the moment of truth in the AI implementation framework – Deployment & Integration. This is where we deploy the validated model into production, making it accessible and useful to end-users or other systems. It’s a step where many AI projects stumble: a model might work in the lab but never make it into the business workflow.

Amzur tackles deployment in a planned, DevOps-like fashion. From the project’s start, we consider how the model will integrate with your existing IT ecosystem, whether it’s your CRM, ERP, mobile app, or IoT devices.

By the time we reach this stage, we have a clear deployment plan: which infrastructure will host the model (cloud or on-premises), how it will interface with other software (e.g., via RESTful APIs or embedded libraries), and what throughput and latency the application requires.
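To illustrate the RESTful option, here is a minimal prediction endpoint built on Python’s standard-library `http.server`. It is a sketch, not a production server. The `/predict` path, the `tenure_months` field, the stand-in `score` function, and the version string are all hypothetical. Request handling lives in `handle_predict` so it can be tested without opening a socket.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

MODEL_VERSION = "0.1.0"  # hypothetical version tag from the model registry


def score(features: dict) -> float:
    """Stand-in for the validated model: a hypothetical churn score in [0, 1]."""
    return min(1.0, max(0.0, 0.8 - 0.02 * features["tenure_months"]))


def handle_predict(body: bytes) -> tuple[int, dict]:
    """Validate the JSON request body and return (HTTP status, response payload)."""
    try:
        payload = json.loads(body)
        features = {"tenure_months": float(payload["tenure_months"])}
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with numeric 'tenure_months'"}
    return 200, {"churn_probability": round(score(features), 4), "model_version": MODEL_VERSION}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Block and serve POST /predict until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts the server locally. A POST to `/predict` with the body `{"tenure_months": 10}` then returns `{"churn_probability": 0.6, "model_version": "0.1.0"}`. Returning the model version with every prediction makes it easier to trace outputs back to a specific model later.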

We package the AI model using modern best practices – often containerizing it with tools like Docker or using cloud ML deployment services – to ensure scalability and reliability. Our engineers work closely with your IT team to integrate the AI solution smoothly. This might involve setting up data pipelines so that new data flows to the model in real-time, or integrating the model’s outputs into a user-friendly dashboard for business users.
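For the data-pipeline case, a common pattern is to validate incoming records and score them in small batches as they arrive. The sketch below uses Python generators, so nothing is loaded into memory up front. The required fields, the batch size, and the stand-in `toy_model` are assumptions. A real pipeline would read from a message queue or streaming platform rather than a list.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator

REQUIRED_FIELDS = ("customer_id", "tenure_months")  # assumed input schema


def is_valid(record: dict) -> bool:
    """Reject records with missing required fields before they reach the model."""
    return all(record.get(field) is not None for field in REQUIRED_FIELDS)


def micro_batches(stream: Iterable, size: int) -> Iterator[list]:
    """Group a (possibly unbounded) stream into lists of at most `size` items."""
    it = iter(stream)
    while batch := list(islice(it, size)):
        yield batch


def score_stream(stream: Iterable[dict],
                 predict_batch: Callable[[list[dict]], list[float]],
                 size: int = 100) -> Iterator[dict]:
    """Validate, batch, and score records lazily as they arrive."""
    valid_records = (r for r in stream if is_valid(r))
    for batch in micro_batches(valid_records, size):
        for record, score in zip(batch, predict_batch(batch)):
            yield {"customer_id": record["customer_id"], "score": score}


# Hypothetical events and a stand-in batch model
events = [{"customer_id": i, "tenure_months": 6 * i} for i in range(5)]
events.append({"customer_id": 99})  # malformed: missing tenure_months
toy_model = lambda batch: [round(1 / (1 + r["tenure_months"]), 3) for r in batch]

for result in score_stream(events, toy_model, size=2):
    print(result)
```

Batching trades a little latency for throughput. The batch size is a tuning knob tied to the latency requirement set in the deployment plan.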

We also implement necessary authentication, security, and compliance checks at this stage, so the AI system meets enterprise security standards and regulatory requirements. Deployment isn’t just a technical drop-off; it’s a holistic change management effort.
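As one small example of the authentication checks mentioned above, the sketch below authenticates API clients by hashing the presented key and comparing it with a stored digest. The comparison uses `hmac.compare_digest`, a constant-time function from Python’s standard library. The client name and in-memory key store are hypothetical. Real deployments would typically keep secrets in a dedicated secrets manager.

```python
import hashlib
import hmac
import secrets


def hash_key(key: str) -> str:
    """Store only a SHA-256 digest of each issued API key, never the key itself."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


# Hypothetical key store for an integration client
issued_key = secrets.token_urlsafe(32)
KEY_DIGESTS = {"crm-integration": hash_key(issued_key)}


def authenticate(client_id: str, presented_key: str) -> bool:
    expected = KEY_DIGESTS.get(client_id)
    if expected is None:
        return False
    # Constant-time comparison avoids leaking information through response timing
    return hmac.compare_digest(expected, hash_key(presented_key))


print(authenticate("crm-integration", issued_key))  # True
print(authenticate("crm-integration", "wrong-key"))  # False
```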

We provide training sessions or documentation to the end-users and IT staff on how to use and support the new AI-driven system. By making deployment a first-class citizen in the AI framework (rather than an afterthought), we ensure the brilliant model developed in Step 3 actually sees the light of day. At the end of Step 5, your AI solution is live, integrated, and delivering value within your operations – this is where AI starts paying dividends.

Step 6: Monitoring & Maintenance

The final step of our AI implementation framework is what separates a one-off experiment from a lasting success: Monitoring & Maintenance. AI projects do not end at deployment; in fact, that’s where the real journey begins. Once the model is in production, we continuously monitor its performance against the defined success metrics and KPIs. This involves tracking predictions and outcomes over time and setting up alerts or dashboards for key performance indicators.

For example, if we deploy a customer churn prediction model, we monitor how accurate those predictions are month over month. If accuracy starts to drift downward, that’s a signal something has changed – perhaps consumer behavior has shifted or new competitors have emerged – and the model may need attention.
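That month-over-month check can be expressed in a few lines. This sketch assumes three things, all hypothetical: a log that joins each prediction with the outcome observed later, a validation baseline of 0.82, and a 5-point tolerance.

```python
def monthly_accuracy(records):
    """records: iterable of (month, predicted_churn, actual_churn) tuples."""
    totals, hits = {}, {}
    for month, predicted, actual in records:
        totals[month] = totals.get(month, 0) + 1
        hits[month] = hits.get(month, 0) + (predicted == actual)
    return {m: hits[m] / totals[m] for m in sorted(totals)}


def accuracy_alerts(acc_by_month, baseline, max_drop=0.05):
    """Months whose accuracy fell more than `max_drop` below the validation baseline."""
    return [m for m, acc in acc_by_month.items() if baseline - acc > max_drop]


# Hypothetical log of predictions joined with observed outcomes
records = (
    [("2025-01", 1, 1)] * 8 + [("2025-01", 1, 0)] * 2
    + [("2025-02", 0, 0)] * 7 + [("2025-02", 0, 1)] * 3
)
acc = monthly_accuracy(records)
print(acc, accuracy_alerts(acc, baseline=0.82))  # {'2025-01': 0.8, '2025-02': 0.7} ['2025-02']
```

Churn outcomes only become known after the fact. A month’s accuracy can therefore be computed only once its labels arrive.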

We also watch for data drift and model drift – situations where the input data or underlying patterns evolve away from what the model was trained on. When such changes are detected, our team proactively plans for model updates. Maintenance can include periodically retraining the model with fresh data, fine-tuning it, or even selecting a new model architecture if necessary.
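Data drift is often quantified with the Population Stability Index (PSI), which compares how a feature is distributed in training data versus production. This sketch uses equal-width bins over the training range for simplicity; quantile bins are another common choice. A commonly cited rule of thumb treats these PSI values as follows:

- below 0.1: stable
- 0.1 to 0.25: moderate shift
- above 0.25: significant shift

These cut-offs are conventions, not guarantees. The feature values below are hypothetical.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e), where e and a
    are the shares of training (expected) and production (actual) values per bin."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # clamp values outside the training range
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_ages = list(range(20, 70))                    # hypothetical feature at training time
production_ages = [age + 15 for age in training_ages]  # same feature after a shift
print(round(psi(training_ages, training_ages), 4))     # 0.0: identical distributions
print(round(psi(training_ages, production_ages), 2))
```

Running this per feature on a schedule gives an early warning that often arrives before accuracy visibly drops, because it needs no outcome labels.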

The framework ensures we schedule these check-ins (for instance, quarterly model refreshes or automated retraining if performance dips below a threshold). Another critical aspect of monitoring is gathering user feedback in production. Users might discover new use cases or edge cases, and we feed that information back into improving the system.
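A retraining policy like the one described can be written down as a single function, so the triggers are explicit and auditable. The 91-day “quarterly” window, the metric threshold, and the drift limit below are hypothetical defaults.

```python
from datetime import date, timedelta


def should_retrain(today, last_trained, current_metric, threshold,
                   max_age=timedelta(days=91), drift_score=None, drift_limit=0.25):
    """Return (decision, reasons). Retrain on a roughly quarterly schedule, when the
    monitored metric falls below the agreed threshold, or when input drift is too high."""
    reasons = []
    if today - last_trained >= max_age:
        reasons.append("scheduled quarterly refresh")
    if current_metric < threshold:
        reasons.append(f"metric {current_metric:.2f} below threshold {threshold:.2f}")
    if drift_score is not None and drift_score > drift_limit:
        reasons.append(f"drift score {drift_score:.2f} above {drift_limit:.2f}")
    return bool(reasons), reasons


print(should_retrain(date(2025, 3, 1), date(2025, 1, 15), 0.70, 0.75))
```

Returning the reasons, not just a yes/no decision, leaves a record of why each retraining happened.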

We view AI as a living product – much like software gets version updates, your AI models get continuous improvement through maintenance cycles.

Moreover, Amzur’s team remains a partner in this phase, providing ongoing support and updates. We help audit the model’s decisions for fairness or errors over time, ensuring it continues to meet ethical and regulatory standards as they evolve. This step builds tremendous trust with our clients – they know that adopting AI isn’t a one-and-done deal, but a long-term strategic capability with Amzur by their side.
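One common starting point for a fairness audit is comparing selection rates across groups. This sketch computes each group’s positive-decision rate and the ratio of the lowest rate to the highest. The group labels and decisions are hypothetical. The 0.8 cut-off borrows the “four-fifths rule” heuristic from US employment-selection guidelines; it is not a universal standard.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of positive decisions (e.g. 'approve') per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += int(d)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(rates):
    """Ratio of the lowest to the highest group selection rate."""
    return min(rates.values()) / max(rates.values())


groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 6
rates = selection_rates(groups, decisions)
ratio = disparate_impact(rates)
print(rates, round(ratio, 3), "review" if ratio < 0.8 else "ok")  # {'A': 0.6, 'B': 0.4} 0.667 review
```

A low ratio is a prompt for human review, not an automatic verdict. Legitimate factors can explain differences in selection rates, and other fairness definitions may be more appropriate for a given use case.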

By including Monitoring & Maintenance in the AI framework, we ensure your AI solution keeps delivering value in the long run and adapts to new challenges. It’s a safeguard that the investment made in AI continues to yield returns and doesn’t fade into irrelevance after a few months.

Conclusion

In today’s competitive landscape, adopting AI can be transformative – but only if done with a comprehensive plan. Amzur’s 6-step AI implementation framework is a proven blueprint that covers the entire AI project lifecycle, from concept to creation and beyond.

Each step in this AI implementation framework builds on the previous, ensuring nothing is left to chance: we align AI strategy with your business goals, build on a solid data foundation, develop the right models, test them rigorously, deploy effectively, and maintain them for continuous improvement. This holistic approach embodies what the best AI frameworks in the industry emphasize – a balance of technical excellence, strategic alignment, and human oversight.

We stand ready as your AI implementation partner to guide you through each step, helping turn your AI concepts into reality and ensuring those innovations deliver lasting value. Trust Amzur to lead your AI journey from first idea to full-fledged success.

Frequently Asked Questions

How do you ensure data quality in AI projects?

Data quality is ensured by cleaning and transforming data, performing Exploratory Data Analysis (EDA), and addressing missing or inconsistent values. This solid foundation helps the AI model make accurate predictions.

What challenges do businesses face in AI implementation?

Businesses often face unclear objectives, poor data quality, and integration issues with existing systems. A structured AI framework helps mitigate these risks by aligning AI with business goals and ensuring rigorous testing.

How does model selection impact AI project outcomes?

Choosing the right model ensures it fits the business problem and data characteristics, directly affecting accuracy, interpretability, and overall performance. Proper model selection minimizes risks and optimizes results.

Why is ongoing monitoring and maintenance crucial for AI systems?

AI systems must be continuously monitored to adapt to evolving data and market conditions. Amzur’s AI framework includes regular model checks, performance tracking, and updates to detect and correct model drift and ensure long-term value.

Director ATG & AI Practice