Why AI Ethics Is the Future of Healthcare: Beyond Compliance

AI Ethics In Healthcare: Say No To Bias & Inefficiency

The healthcare industry is undergoing a seismic shift as Artificial Intelligence (AI) takes center stage in transforming care delivery. From streamlining tedious administrative tasks to diagnosing diseases with precision, AI holds the promise of revolutionizing the way healthcare operates. But this rapid innovation isn’t without its pitfalls, and biased algorithms are among the most serious. This is where AI ethics in healthcare comes into play: it helps organizations identify and reduce AI bias across the healthcare industry.

While regulations like HIPAA and FDA guidelines provide a foundational framework to safeguard patient data and ensure system safety, they merely scratch the surface. Ethical AI in healthcare goes beyond compliance, ensuring systems are not just legally sound but also fair, transparent, and aligned with the core values of medical and patient care.

This article explores why regulatory compliance alone isn’t enough and why AI ethics is crucial to earning trust. Let’s explore how AI and healthcare organizations can grow together.

Key Takeaways

While regulations like HIPAA and FDA guidelines set minimum safety and data protection standards, they often overlook ethical concerns such as algorithmic bias, fairness, and patient trust in AI-driven healthcare systems.

Compliance ensures legal accountability, while AI ethics focuses on fairness, transparency, and accountability in decision-making. Organizations must align both to create AI systems that are not just legal but also equitable and trustworthy.

Ethical AI fosters patient trust by ensuring transparency, fairness, and accountability. Patients are more likely to embrace AI-driven care when they understand how decisions are made and feel confident that the technology has been tested and monitored for bias.

Critical issues like data privacy, algorithmic bias, and security vulnerabilities can be mitigated through strategies such as robust encryption, training on diverse and representative data, and technologies like blockchain for transparent data management.

Ethical AI in healthcare is not a one-time implementation but an ongoing process. Organizations must continuously monitor, evaluate, and adapt AI strategies to meet evolving ethical standards, technological advancements, and regulatory changes.

Organizations prioritizing AI ethics strategies will lead the healthcare industry by fostering patient-centric care, improving clinical decision-making, and staying ahead of evolving compliance requirements and public expectations.

Why Regulatory Compliance Isn't Enough in Healthcare

Regulations such as HIPAA and FDA guidelines provide essential safeguards for data protection, device safety, and accountability. However, they only establish baseline standards for legal operation and often fall short in addressing the ethical issues that AI raises.

1. Reactive Nature of Regulations

Compliance frameworks respond to known risks and past incidents, leaving emerging challenges like algorithmic bias or evolving cybersecurity threats unaddressed.

2. Process-Oriented Over Outcome-Focused

Regulatory adherence ensures protocols are in place but doesn’t guarantee equitable, patient-centered outcomes.

Real-World Example: Algorithmic Bias in Resource Allocation

In 2020, a study highlighted a case of algorithmic bias in a healthcare AI tool designed to allocate medical resources. Although the system complied with all applicable regulations, it disproportionately favored white patients over Black patients with equivalent medical needs. The discrepancy stemmed from the algorithm’s reliance on healthcare costs as a proxy for patient need: because less had historically been spent on Black patients at the same level of illness, cost understated their need, perpetuating existing disparities in access to care.

The Ethical Response:

The algorithm was revised to prioritize broader data points beyond costs, shifting the focus to actual medical needs. This proactive change was driven not by compliance mandates but by an ethical obligation to promote equity. [Source]
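To see how a cost proxy can skew selection, the toy sketch below ranks the same hypothetical patients once by past spending and once by a direct measure of need. The data, the `Patient` fields, and the use of an active-condition count as the need measure are all assumptions invented for illustration; none of it comes from the study cited above.

```python
from dataclasses import dataclass

@dataclass
class Patient:
    pid: str
    group: str              # hypothetical demographic group label
    active_conditions: int  # assumed stand-in for actual medical need
    annual_cost: float      # past spending: the proxy that introduced the bias

def select_top(patients, key, k):
    """Return the k patients with the highest score under `key`."""
    return sorted(patients, key=key, reverse=True)[:k]

def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)

# Toy data: group "B" has the same need as group "A" at every level,
# but lower historical spending (mimicking unequal access to care).
patients = [
    Patient("a1", "A", 5, 12000.0),
    Patient("a2", "A", 3, 8000.0),
    Patient("a3", "A", 1, 3000.0),
    Patient("b1", "B", 5, 7000.0),
    Patient("b2", "B", 3, 4500.0),
    Patient("b3", "B", 1, 1500.0),
]

by_cost = select_top(patients, key=lambda p: p.annual_cost, k=2)
by_need = select_top(patients, key=lambda p: p.active_conditions, k=2)

print("Selected by cost:", [p.pid for p in by_cost], share_of_group(by_cost, "B"))
print("Selected by need:", [p.pid for p in by_need], share_of_group(by_need, "B"))
```

With `k=2`, ranking by cost selects only group A patients, while ranking by need selects one patient from each group, because in this toy data group B's spending is lower at every level of need.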

Why a Compliance-First Mindset is Risky

A compliance-first mindset often prioritizes immediate regulatory approval over long-term ethical considerations. This approach may help organizations launch AI systems quickly, but it can also lead to:

Reputational Damage

Ethical lapses, such as biased algorithms, can erode trust among patients and providers.

Legal Risks

As regulations evolve, systems that once complied with standards may later be found in violation.

Operational Inefficiencies

Addressing ethical issues retroactively is often more costly and time-consuming than incorporating them upfront.

Why Ethical AI Matters Beyond Compliance

The integration of artificial intelligence (AI) in healthcare raises significant ethical considerations. Key issues include ensuring data privacy and security, addressing potential biases in AI algorithms, and maintaining patient autonomy and informed consent. Healthcare professionals may need to adjust their responsibilities as AI technologies evolve. Establishing regulations and guidelines is also crucial to ensure that AI-driven transformation in healthcare remains ethical and responsible.

1. Building Patient Trust

Patients demand more than regulatory assurances. Transparency, fairness, and accountability are non-negotiable in fostering trust. For instance, a parent relying on an AI diagnostic tool for their child expects decisions to be accurate, unbiased, and comprehensible, not just compliant with technical standards.

2. Promoting Fairness and Equity

Regulatory frameworks often overlook systemic inequities. AI ethics helps ensure that populations historically marginalized in healthcare receive equitable treatment, closing gaps in diagnostic accuracy and resource allocation.

3. Mitigating Long-Term Risks

While compliance addresses immediate risks, ethical AI anticipates future challenges, such as biases in new datasets or vulnerabilities to evolving cyber threats. A proactive approach ensures adaptability and resilience.

4. Enhancing Organizational Reputation

Ethical AI in healthcare distinguishes organizations as leaders in responsible innovation. This fosters stakeholder trust, attracts partnerships, and builds brand loyalty in a competitive market.

Bridging the Gap Between Compliance and AI Ethics

What Compliance Covers

Compliance ensures adherence to laws, such as protecting patient data under HIPAA or verifying an algorithm’s safety per FDA guidelines. These frameworks reduce risks and establish accountability but don’t address ethical complexities like fairness, bias, or trust.

What Ethics Brings to the Table

Ethics focuses on ensuring that AI systems:

Promote Fairness

Minimize bias and ensure equal treatment across demographic groups.

Enable Transparency

Make AI decision-making processes explainable to providers and patients.

Ensure Accountability

Establish clear ownership for outcomes, preventing errors from going unaddressed.

The gap between compliance and AI ethics often emerges because regulations lag behind technological advancements. While compliance ensures AI systems meet minimum standards, ethics ensures these systems benefit everyone they touch.

How to Go Beyond Healthcare Compliance

1. Develop Ethical Frameworks

Establish a proactive AI ethics framework to incorporate fairness, transparency, and inclusivity. Ensure that these principles are revisited regularly to align with technological advancements.

2. Engage Stakeholders

Collaborate with patients, clinicians, ethicists, and regulators to identify and address the gaps that compliance alone leaves open. A multidisciplinary approach ensures comprehensive oversight.

3. Foster Transparency

Prioritize explainable AI tools and communicate decision-making processes clearly to end users. Transparency builds trust among patients and healthcare providers.

4. Continuous Auditing and Improvement

Go beyond regulatory audits by conducting independent ethical assessments of AI tools and their real-world impact.

Challenges Posed by AI in Healthcare and Their Solutions

1. Data Privacy and Security

AI systems in healthcare rely on large volumes of patient data to deliver accurate predictions and insights. This reliance raises concerns about data breaches, unauthorized access, and misuse of sensitive information.

Solution:

Healthcare organizations must prioritize robust encryption and consider decentralized data storage. Blockchain technology can provide a tamper-evident record of data transactions, enhancing transparency and security. Regular audits and compliance with regulations such as GDPR and HIPAA are essential to protecting patient data.
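The tamper-evidence that makes blockchain attractive for audit trails comes from hash chaining: each entry stores a hash of the previous one, so altering any past record breaks every later link. The sketch below shows only that idea, assuming a single in-memory log with a hypothetical `AccessLog` class and made-up record fields. It has no distribution, consensus, or encryption, so it illustrates the concept rather than replacing a blockchain or encryption at rest.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(prev_hash: str, record: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class AccessLog:
    """Hypothetical append-only log of patient-data access events."""

    def __init__(self):
        self.entries = []

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = dict(record)  # store a copy so later edits by the caller don't leak in
        entry = {"prev": prev_hash, "record": record,
                 "hash": _entry_hash(prev_hash, record)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every link; an edited record or reordered entry fails."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if _entry_hash(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = AccessLog()
log.append({"user": "dr_lee", "action": "read", "patient_id": "p-001"})
log.append({"user": "billing_bot", "action": "export", "patient_id": "p-001"})
print(log.verify())

# Simulate tampering with the first record after the fact.
log.entries[0]["record"]["action"] = "none"
print(log.verify())
```

The chain only detects changes: anyone who can rewrite the entire log can also recompute every hash, which is why production systems anchor the latest hash somewhere independent or replicate the log across parties.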

2. Algorithmic Bias and Inequality

AI systems trained on biased or incomplete datasets can produce discriminatory outcomes. For example, algorithms trained primarily on data from one demographic may underperform or misdiagnose for underrepresented groups, exacerbating healthcare disparities.

Solution:

To mitigate bias, developers should use diverse and representative datasets during training. Regular audits and external reviews of AI systems should be conducted to detect and rectify biases. Including diverse stakeholders in the development process, such as clinicians, ethicists, and patient advocacy groups, helps promote fairness in outcomes.
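One concrete form of the regular audits described above is comparing a model's sensitivity (true positive rate) across demographic groups. The sketch below assumes binary labels and predictions, a hypothetical `max_gap` tolerance of 0.05, and made-up example data; the appropriate metric and tolerance depend on the clinical use case.

```python
from collections import defaultdict

def sensitivity_by_group(y_true, y_pred, groups):
    """True positive rate per group. Groups with no positive cases are omitted."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for actual, predicted, group in zip(y_true, y_pred, groups):
        if actual == 1:
            positives[group] += 1
            if predicted == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}

def flag_gaps(rates, max_gap=0.05):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, rate in rates.items() if best - rate > max_gap)

# Made-up audit data: five cases from group A, five from group B.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = sensitivity_by_group(y_true, y_pred, groups)
flagged = flag_gaps(rates)
print(rates, flagged)
```

Here the model catches every positive case in group A but only half in group B, so group B is flagged for review. Sensitivity is just one choice; an audit might equally compare false positive rates or calibration.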

Download our white paper to learn more about strategies to overcome AI bias in healthcare.

3. Lack of Transparency and Explainability

Many AI models, especially those using deep learning, function as “black boxes,” making it difficult to understand how decisions are made. This opacity can erode trust among healthcare providers and patients.

Solution:

The adoption of Explainable AI (XAI) techniques can address this challenge. XAI provides insights into how and why decisions are made, enabling clinicians to validate AI outputs. Regulatory bodies should also mandate transparency, ensuring that algorithms used in clinical care can be scrutinized and understood.

4. Integration with Legacy Systems

Healthcare organizations often rely on legacy IT systems that are incompatible with modern AI solutions, creating barriers to efficient deployment.

Solution:

To overcome this, AI solutions must be designed with interoperability in mind. Developers should adhere to industry standards such as HL7 v2 messaging and HL7 FHIR to simplify integration with existing systems. Collaborating with IT teams during implementation can minimize disruptions and improve adoption.
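As an illustration of what building to FHIR looks like in practice, the sketch below assembles a minimal FHIR R4 `Patient` resource as JSON. The field names (`resourceType`, `id`, `name` with `family` and `given`, `gender`, `birthDate`) follow the FHIR specification, but the `make_fhir_patient` helper and the sample values are hypothetical. A real integration would also handle identifiers, profiles, and validation against a FHIR server.

```python
import json

# Codes from the FHIR administrative-gender value set
ALLOWED_GENDERS = {"male", "female", "other", "unknown"}

def make_fhir_patient(patient_id: str, family: str, given: list,
                      gender: str, birth_date: str) -> dict:
    """Build a minimal FHIR R4 Patient resource as a plain dict (hypothetical helper)."""
    if gender not in ALLOWED_GENDERS:
        raise ValueError(f"unsupported gender code: {gender!r}")
    return {
        "resourceType": "Patient",
        "id": patient_id,
        "name": [{"family": family, "given": list(given)}],  # HumanName; given is a list
        "gender": gender,
        "birthDate": birth_date,  # FHIR date, e.g. "1984-07-02"
    }

patient = make_fhir_patient("example-001", "Rivera", ["Ana"], "female", "1984-07-02")
print(json.dumps(patient, indent=2))
```

Keeping the mapping from a legacy record to a standard resource in one small function like this makes it easier for IT teams to review and test the integration boundary.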

5. Ethical Concerns and Public Trust

Public skepticism regarding AI in healthcare stems from fears of job displacement, misuse of data, and dehumanized care.

Solution:

Healthcare organizations must actively engage with the public through transparent communication about AI’s benefits and limitations. Establishing AI ethical guidelines and ensuring that patients and clinicians are involved in decision-making can foster trust and acceptance.

Striking a Balance: Innovation vs. Regulation

Establishing a robust regulatory framework for healthcare AI is critical to integrating and overseeing emerging technologies effectively. Such a framework must translate high-level principles into specific, practical requirements for both the systems and the people responsible for operating them. Organizations must provide clear and transparent information about the intended purpose of their AI, the types of datasets used, and meaningful details about the logic and testing behind their systems.

Regulators play a vital role in overseeing AI systems by identifying and addressing gaps in their inputs, outputs, and overall functionality. This includes recognizing potential legal, ethical, or discriminatory gaps that may arise during AI deployment.

For AI developers, ethical guidelines must prioritize transparency, requiring systems to disclose the data sources and processes behind their decisions. Developers must address algorithmic bias proactively, emphasizing fairness and minimizing discriminatory outcomes. Integrating ethical considerations throughout the AI development cycle involves rigorous bias testing, continuous monitoring, and mechanisms to mitigate potential ethical issues.

AI Ethics Framework For Healthcare Orgs

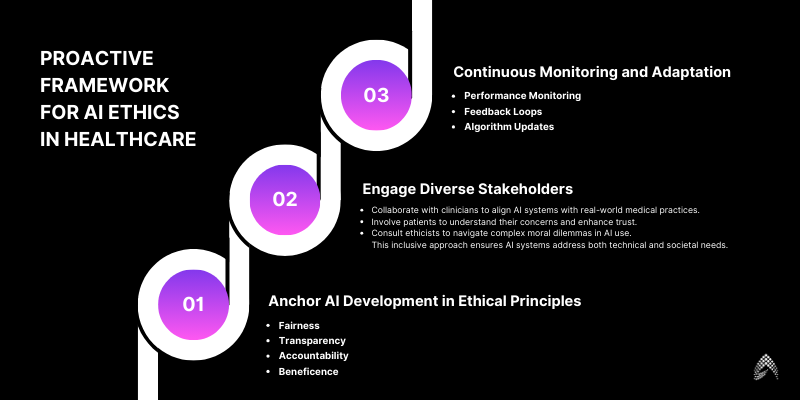

To move beyond compliance, healthcare organizations must adopt proactive AI ethics frameworks that prioritize fairness, transparency, and accountability.

1. Anchor AI Development in Ethical Principles

Fairness

Conduct regular audits and source diverse datasets to mitigate bias.

Transparency

Use explainable AI models to ensure decision-making processes are accessible to all stakeholders.

Accountability

Assign clear ownership of AI decisions, ensuring responsibility for outcomes.

Beneficence

Prioritize patient welfare over cost-cutting or operational efficiency.
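To make the transparency principle concrete: for a linear (logistic) risk model, each feature's contribution to a prediction can be read directly as its weight times the feature's difference from a reference patient, in log-odds units. The sketch below uses hypothetical weights, features, and reference values, so it is not a clinical model. Deep learning models need dedicated XAI methods, such as SHAP or integrated gradients, to produce comparable attributions.

```python
import math

# Hypothetical weights and reference values, chosen for illustration only.
WEIGHTS = {"age": 0.03, "systolic_bp": 0.02, "hba1c": 0.4}
INTERCEPT = -8.0
REFERENCE = {"age": 50.0, "systolic_bp": 120.0, "hba1c": 5.5}

def log_odds(x: dict) -> float:
    return INTERCEPT + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)

def risk(x: dict) -> float:
    """Logistic risk score between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-log_odds(x)))

def explain(x: dict) -> list:
    """Per-feature contributions (log-odds) relative to the reference patient,
    sorted by magnitude. They sum, up to rounding, to log_odds(x) - log_odds(REFERENCE)."""
    contributions = {f: WEIGHTS[f] * (x[f] - REFERENCE[f]) for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

patient = {"age": 60.0, "systolic_bp": 150.0, "hba1c": 8.0}
print(f"risk = {risk(patient):.2f}")
for feature, contribution in explain(patient):
    print(f"{feature:>12}: {contribution:+.2f}")
```

A clinician reviewing this output can see which inputs pushed the score up the most and check them against the chart, which is the kind of validation explainability is meant to enable.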

2. Engage Diverse Stakeholders

Collaborate with clinicians to align AI systems with real-world medical practices.

Involve patients to understand their concerns and enhance trust.

Consult ethicists to navigate complex moral dilemmas in AI use.

This inclusive approach ensures AI systems address both technical and societal needs.

3. Continuous Monitoring and Adaptation

AI systems operate in dynamic environments, requiring ongoing oversight, such as:

Performance Monitoring

Regularly assess system outputs to identify unintended consequences.

Feedback Loops

Establish mechanisms for users to report issues and suggest improvements.

Algorithm Updates

Continuously refine systems to reflect new insights and mitigate risks.
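The performance-monitoring step above could start as simply as tracking accuracy over a sliding window of recent cases whose true outcomes are known, and flagging the system for review when accuracy drops below its validated baseline. The `PerformanceMonitor` class, window size, and tolerance below are hypothetical; real monitoring would typically also track subgroup performance and shifts in input data.

```python
from collections import deque

class PerformanceMonitor:
    """Flags a model for human review when recent accuracy falls below baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 100,
                 tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, prediction, actual) -> None:
        self.results.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        # Wait for a full window so a handful of early cases cannot trigger an alert.
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

monitor = PerformanceMonitor(baseline_accuracy=0.90, window=10)
for _ in range(10):
    monitor.record(prediction=1, actual=1)
print(monitor.rolling_accuracy(), monitor.needs_review())

for _ in range(4):  # four recent misses leave 6 correct out of the last 10
    monitor.record(prediction=1, actual=0)
print(monitor.rolling_accuracy(), monitor.needs_review())
```

An alert from a monitor like this would feed the feedback loops and algorithm updates described above rather than change the model automatically.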

By embedding these principles into the development and deployment process, healthcare organizations can ensure that their AI systems serve patients ethically and effectively.

AI Framework Example in Health Care:

American Medical Association (AMA) Framework for AI in Health Care

Overview:

The AMA has established principles to guide the development and implementation of AI, referred to as “augmented intelligence,” in healthcare. These principles emphasize the importance of ethics, evidence, and equity in AI applications.

Key Principles:

Ethics

AI systems should be designed and used in ways that align with medical ethics, prioritizing patient autonomy, privacy, and shared decision-making.

Evidence

The efficacy and safety of AI tools must be validated through rigorous clinical evidence before widespread adoption.

Equity

AI should promote health equity, ensuring that all patient populations benefit from technological advancements without exacerbating existing disparities.

Implementation Guidance:

Engage in multidisciplinary collaboration, including input from clinicians, patients, and ethicists, during AI development.

The AMA’s framework serves as a cornerstone for advocating national policies that ensure AI’s ethical, equitable, and responsible integration into healthcare.

Conclusion

Compliance is important for healthcare AI, but it is not enough. Organizations need to go beyond just following rules and adopt a strong ethical framework. This framework should focus on fairness, transparency, accountability, and patient welfare while addressing the challenges of using AI in healthcare.

By combining compliance and ethics, healthcare organizations can reduce risks and fully utilize AI to improve patient care.

Are you ready to take your healthcare AI systems beyond basic compliance? Contact us today to learn how our solutions can help you lead with ethics and effectiveness. Amzur has been a trusted technology partner for many thriving businesses in the USA for the past two decades. If you are looking for an AI consulting and implementation company in the USA, get in touch with our AI expert team.

Check out how we helped our healthcare client with automated claim processing and drug administration.

Director ATG & AI Practice