The Role of Docker Containers in CI/CD Pipeline Security and Consistency

Introduction

As software development evolves, Continuous Integration and Continuous Deployment (CI/CD) pipelines have become the backbone of efficient, automated workflows. However, with speed comes risk. Securing your CI/CD pipeline, especially in containerized environments using Docker and Kubernetes, is more critical than ever. Supply chain attacks and poorly protected pipelines can introduce vulnerabilities that undermine the security of your entire software delivery process and lead to data breaches. As threats grow increasingly sophisticated, enterprises must apply security best practices to protect their pipelines at every stage.

In this article, we’ll delve into the essential security strategies for each phase of your CI/CD pipeline, with a special focus on securing source code management, build servers, testing tools, deployment tools, and the growing concerns around supply chain security. We’ll also discuss data encryption throughout the pipeline, container security practices, and tools to help you automate vulnerability scans to ensure secure and robust pipelines.

Strategies to Secure the CI/CD Pipeline:

1. Securing the Source Code Management (SCM)

The CI/CD pipeline starts with Source Code Management (SCM). If the SCM is compromised, attackers can introduce malicious code at the very beginning of the pipeline, affecting every downstream process.

Key Best Practices for SCM Security:

- Access Control: Ensure that only authorized users and machines can access your source code repositories. Implement multi-factor authentication (MFA) and use SSH keys for secure access.

- Branch Protection: Enforce policies that require code reviews before merging any pull requests. Set up automatic checks to prevent direct pushes to production branches.

- Code Signing: Require signed commits and tags (for example, using GPG or SSH keys) so that the author of each change can be verified and tampering can be detected.

- Encryption of Source Code: Use encryption to protect source code at rest in your repositories, especially when stored in cloud-based SCM services like GitHub or GitLab.

- Automated Secrets Detection: Tools like TruffleHog and GitGuardian can automatically scan your repositories for sensitive information (e.g., passwords, API keys), and can run as pre-commit hooks to catch secrets before they are committed.
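To give a feel for what secret scanners do under the hood, the sketch below checks text line by line against a few regular expressions. The three patterns (an AWS-style access key ID, a quoted `password`/`api_key`/`secret` assignment, and a PEM private-key header) are simplified assumptions for illustration; real tools such as TruffleHog and GitGuardian use much larger rule sets, entropy analysis, and credential verification.

```python
import re

# Simplified, illustrative patterns only; real scanners ship hundreds of rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"(?i)\b(password|passwd|api[_-]?key|secret)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for lines that look like they contain secrets."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    sample = 'user = "alice"\napi_key = "sk_live_0123456789abcdef"\n'
    for lineno, rule in find_secrets(sample):
        print(f"line {lineno}: possible {rule}")
```

A check like this could run as a pre-commit hook or CI step that fails whenever `find_secrets` returns a non-empty list.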

Use Case: A large-scale e-commerce platform can prevent unauthorized third-party developers or insiders from pushing unreviewed changes by enforcing strict branch protection, and can catch exposed credentials early with automated secret scanning.

2. Securing the Build Servers

The build server is where code is compiled and tested, making it a prime target for attackers. If compromised, it can lead to the introduction of backdoors into the application, making it essential to secure this layer of the pipeline.

Best Practices for Securing Build Servers:

- Isolate Build Environments: Run builds in isolated environments (e.g., virtual machines or containers) to limit the impact of a potential breach.

- Image Scanning: Use tools like Anchore or Clair to scan container images before they are used in the build process.

- Continuous Monitoring: Implement monitoring and logging tools to track any unauthorized access or changes made during the build process. Use logging solutions like the ELK stack to analyze activity in near real time.

- Automate Dependency Scanning: Integrate tools like Snyk or SonarQube into the build process to automatically identify vulnerabilities in code dependencies.

- Secure Build Agents: Always keep build agents updated with the latest patches, and ensure they are isolated from production systems.
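Image and dependency scanners can usually export their findings as a machine-readable report, which lets the pipeline fail a build automatically when serious issues appear. The sketch below assumes a simplified, hypothetical report format (a JSON list of findings, each with `id`, `package`, and `severity`); the real output of Anchore, Clair, Snyk, or SonarQube differs, so the parsing would need to be adapted.

```python
import json

# Severity ranking for the simplified, hypothetical report format used here.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(report_json: str, threshold: str = "high") -> list[dict]:
    """Return findings whose severity is at or above `threshold`."""
    findings = json.loads(report_json)
    limit = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if SEVERITY_RANK.get(str(f.get("severity", "")).lower(), 0) >= limit
    ]


def gate(report_json: str, threshold: str = "high") -> int:
    """Print blocking findings and return an exit code: 0 to pass, 1 to fail the build."""
    blockers = blocking_findings(report_json, threshold)
    for f in blockers:
        print(f"{f['severity'].upper()}: {f['id']} in {f['package']}")
    return 1 if blockers else 0


if __name__ == "__main__":
    # Placeholder findings; a real pipeline would read the scanner's report file instead.
    report = json.dumps([
        {"id": "EXAMPLE-1", "package": "libexample", "severity": "critical"},
        {"id": "EXAMPLE-2", "package": "otherlib", "severity": "low"},
    ])
    print("exit code:", gate(report))
```

In a CI job, the return value of `gate` would be passed to `sys.exit` so that a non-zero code stops the pipeline.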

3. Securing Testing Tools

After building the application, the next stage is testing. However, vulnerabilities can still be introduced during testing if proper controls are not in place.

Key Security Practices for Testing Tools:

- Security Testing Automation: Integrate security testing into your CI/CD pipeline using tools like OWASP ZAP and Burp Suite to automate penetration testing and vulnerability scanning.

- Testing with Least Privilege: Run tests with the minimum privileges necessary to limit privilege escalation within the testing environment.

- Secure Test Data: Mask or anonymize any sensitive data used in testing environments to prevent exposure of real-world data during the testing phase.

- Security Testing at Multiple Phases: Include security checks at every level of testing: unit tests, integration tests, and end-to-end tests.

- Automated Code Review: Use tools like SonarQube for continuous code quality and security review, catching bugs and vulnerabilities early.
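One common way to mask test data is deterministic pseudonymization: each sensitive value is replaced with a keyed hash, so the same input always maps to the same token (joins and lookups keep working) while the original value cannot be read back. The sketch assumes records are plain dictionaries; the field names and the masking key are hypothetical, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

# Fields treated as sensitive in this illustrative example.
SENSITIVE_FIELDS = {"email", "phone", "card_number"}


def pseudonymize(value: str, key: bytes) -> str:
    """Map a value to a stable, non-reversible token using HMAC-SHA256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_record(record: dict, key: bytes) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        field: pseudonymize(str(value), key) if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    # Hypothetical key for the demo only; never hard-code a real masking key.
    key = b"example-masking-key"
    customer = {"id": 42, "email": "jane@example.com", "card_number": "4111111111111111"}
    print(mask_record(customer, key))
```

Using a keyed HMAC rather than a plain hash matters here: without the key, an attacker cannot hash candidate emails or card numbers to reverse the tokens.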

4. Securing Deployment Tools

The final step in the CI/CD pipeline is deployment. Deployment systems can be highly vulnerable to compromise if proper security controls aren’t in place.

Deployment Security Best Practices:

- Encrypt Communication Channels: Use TLS to ensure that the communication between deployment systems and cloud environments is encrypted.

- Automate Rollbacks: Implement an automated rollback mechanism in case a security breach or failure occurs during deployment.

- Use Immutable Infrastructure: Adopt immutable infrastructure techniques (via Docker or Kubernetes) so that you can replace compromised systems rather than patching them.

- Manage Secrets Securely: Use tools like HashiCorp Vault to securely store and distribute secrets (e.g., API keys) used during deployment.

- Ensure Auditability: Implement logging and auditing for all deployment actions, allowing you to track who deployed what, when, and where.
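A hash-chained, append-only log is one way to make the deployment audit trail tamper-evident: each entry stores the hash of the entry before it, so editing or deleting an earlier record breaks the chain. This is an illustrative sketch with in-memory storage and hypothetical names; a production system would persist entries to write-once storage or a dedicated audit service.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """Hash every field except the entry's own hash, using a canonical key order."""
    payload = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class DeploymentAuditLog:
    """Append-only, in-memory log of who deployed what, when, and where."""
    entries: list = field(default_factory=list)

    def record(self, who: str, what: str, where: str) -> dict:
        entry = {
            "who": who,
            "what": what,
            "where": where,
            "when": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was edited, or removed or reordered mid-chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = DeploymentAuditLog()
    log.record("ci-bot", "shop-web:1.4.2", "production")  # hypothetical actor and image
    log.record("alice", "shop-web:1.4.3", "production")
    print(log.verify())
```

Deleting the newest entries cannot be detected from the log alone; periodically copying the latest hash to a separate system closes that gap.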

5. Securing Containers (Docker & Kubernetes)

Containers offer great flexibility but require careful management to avoid security risks.

Container Security Best Practices:

- Use Trusted Images: Always use official or verified images from Docker Hub or your organization’s trusted container registries, and scan them regularly for vulnerabilities.

- Limit Container Privileges: Run containers as a non-root user with only the privileges they need, and avoid granting unnecessary root access or Linux capabilities.

- Runtime Security: Use tools like Aqua Security or Falco to monitor containers during runtime for unusual behavior, like privilege escalation or network traffic anomalies.

- Implement Network Segmentation: Isolate containers into separate networks to prevent unauthorized lateral movement within your infrastructure.

- Regular Patch Management: Keep the Docker engine and Kubernetes platform up to date with the latest patches to mitigate any known vulnerabilities.
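Two of these practices can be checked before an image is even built. The sketch below is a deliberately simplified Dockerfile linter, assuming a team policy that base images are pinned by `sha256` digest and that the final build stage switches to a non-root `USER`. It ignores line continuations and stage aliases (e.g. `FROM builder`), so treat it as an illustration rather than a substitute for a dedicated linter such as Hadolint.

```python
import re

# Matches an image reference pinned to a content digest, e.g. "python:3.12-slim@sha256:<64 hex chars>".
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")
ROOT_USERS = {"root", "0"}


def check_dockerfile(text: str) -> list[str]:
    """Return policy violations for a Dockerfile (simplified: no line continuations)."""
    problems = []
    user = None  # USER set in the current build stage, if any
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        args = parts[1] if len(parts) > 1 else ""
        if instruction == "FROM":
            # Skip flags such as --platform=...; the first remaining token is the image.
            tokens = [t for t in args.split() if not t.startswith("--")]
            image = tokens[0] if tokens else ""
            if image.lower() != "scratch" and not DIGEST_RE.search(image):
                problems.append(f"base image not pinned by digest: {image}")
            user = None  # each FROM starts a new stage; earlier USER lines no longer apply
        elif instruction == "USER":
            user = args.strip()
    # Most base images default to root, so a missing USER is treated as root here.
    if user is None or user.split(":")[0] in ROOT_USERS:
        problems.append("final stage runs as root: add a non-root USER")
    return problems


if __name__ == "__main__":
    print(check_dockerfile("FROM python:3.12-slim\nRUN pip install flask\n"))
```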

6. Supply Chain Security

In today’s landscape, supply chain vulnerabilities—particularly in third-party dependencies and open-source components—are among the leading causes of breaches.

Supply Chain Security Best Practices:

- Scan for Vulnerabilities in Dependencies: Use tools like Snyk and Sonatype Nexus to continuously monitor third-party libraries and dependencies for vulnerabilities.

- Implement Dependency Locking: Lock versions of dependencies to known secure versions to mitigate risks from untrusted updates.

- Use Trusted Sources: Always pull dependencies from trusted, official repositories.

- Verify Artifact Integrity: Ensure that artifacts are signed, and that integrity checks are in place to verify their authenticity before they are used in the pipeline.

- Zero-Trust Approach: Trust no external tools or dependencies by default. Regularly audit and validate all third-party services.
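Dependency locking and artifact verification both come down to comparing what you received with a digest recorded when the artifact was trusted. The sketch below assumes a simple lock mapping of file names to SHA-256 hex digests (the artifact name is hypothetical); package managers have their own formats for this, such as pip’s `--require-hashes` mode or the `integrity` field in npm’s `package-lock.json`. Digest checks prove integrity only; proving who produced an artifact additionally requires a signature, for example with Sigstore’s cosign.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute a file's SHA-256 hex digest, reading it in 64 KiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_artifacts(lock: dict[str, str], directory: Path) -> list[str]:
    """Check each locked file in `directory` against its expected digest.

    Returns a list of problems; an empty list means every artifact matched.
    """
    problems = []
    for name, expected in lock.items():
        path = directory / name
        if not path.is_file():
            problems.append(f"missing: {name}")
        elif sha256_of(path) != expected.lower():
            problems.append(f"digest mismatch: {name}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        artifact = Path(tmp) / "libexample-1.2.3.tar.gz"  # hypothetical artifact
        artifact.write_bytes(b"trusted contents")
        lock = {artifact.name: hashlib.sha256(b"trusted contents").hexdigest()}
        print(verify_artifacts(lock, Path(tmp)))  # an empty list means all matched

        artifact.write_bytes(b"tampered contents")
        print(verify_artifacts(lock, Path(tmp)))
```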

Let’s look at how Docker can help build a robust and secure CI/CD pipeline.

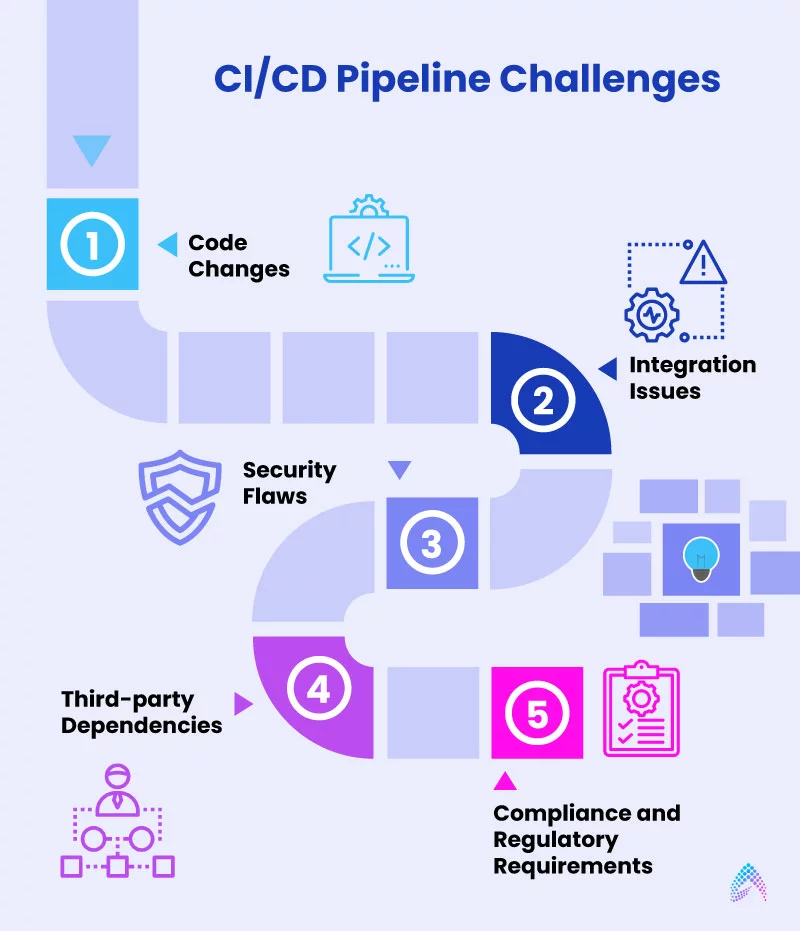

CI/CD Pipeline Challenges:

Continuous Integration and Continuous Deployment (CI/CD) pipelines offer numerous benefits, including faster development and automated testing and deployment of new features. However, they also come with challenges and security concerns.

Here are five common challenges and concerns with CI/CD pipelines:

1. Code Changes:

Because CI/CD involves frequent code changes, maintaining the security of the development process is challenging. Development and testing teams need to ensure that every code change is thoroughly tested and verified before it is integrated into the deployment pipeline.

Changes that are incompatible with the existing code can introduce new vulnerabilities or security flaws.

2. Integration Issues:

Integration is a common source of problems because a CI/CD pipeline combines many different software components into one system. Since these components may have different security requirements, integration issues can lead to vulnerabilities and process disruptions.

3. Security Flaws:

Security flaws can happen at any stage if developers accidentally introduce vulnerabilities into the code or the infrastructure is not secure. These vulnerabilities might become gateways for hackers to access systems and sensitive data.

4. Third-party Dependencies:

CI/CD pipelines often rely on third-party libraries and tools. Verifying the security of these dependencies, keeping them up to date, and ensuring they are free of known vulnerabilities can be a significant concern.

5. Compliance and Regulatory Requirements:

Different industries and organizations have specific compliance requirements. Ensuring that the CI/CD pipeline meets these standards, including data protection regulations like GDPR, adds complexity and security concerns to the process.

Addressing these challenges and security concerns requires a combination of best practices, security tools, and a proactive approach to secure CI/CD pipeline design and maintenance.

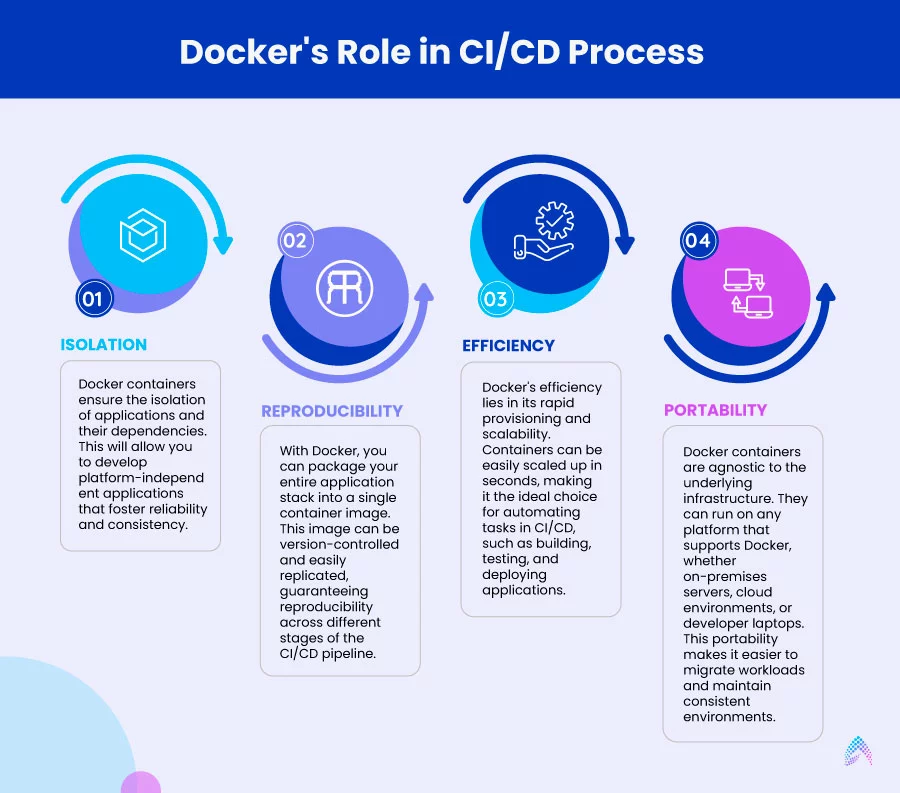

Docker in the CI/CD Pipeline:

Docker’s primary role in a CI/CD workflow is to provide a consistent and portable environment for applications. It achieves this by packaging an application with all its dependencies, libraries, and configuration into a lightweight, standalone container, which helps teams develop, ship, and deploy applications quickly and securely.

With Docker, your development environment can closely match your production environment, and you can manage your infrastructure the same way you manage your applications. Docker largely eliminates the classic “it works on my machine” problem.

Here is how Docker plays a pivotal role in the CI/CD process:

1. Isolation:

Docker containers isolate applications and their dependencies from each other and from the host. Each application runs with exactly the libraries it needs, unaffected by other software on the same machine, which improves reliability and consistency.

2. Reproducibility:

With Docker, you can package your entire application stack into a single container image. The image can be versioned (with its Dockerfile kept in version control) and replicated exactly, so every stage of the CI/CD pipeline runs the same artifact.

3. Efficiency:

Docker’s efficiency lies in rapid provisioning and scalability. Containers typically start in seconds and can be scaled out quickly, which makes Docker well suited to automating CI/CD tasks such as building, testing, and deploying applications.

4. Portability:

Docker containers are agnostic to the underlying infrastructure. They can run on any platform that supports Docker, whether on-premises servers, cloud environments, or developer laptops. This portability makes it easier to migrate workloads and maintain consistent environments.

The Role of Docker in Improving CI/CD Consistency and Portability:

Docker has changed the way applications are built, tested, and deployed, and Docker containers are especially valuable for improving CI/CD pipeline consistency and portability.

1. Consistency through Containerization:

Building and configuring an application separately for every target platform with traditional methods is time-consuming, resource-intensive, and costly.

Docker, on the other hand, excels at creating a consistent environment for applications at every stage of the CI/CD pipeline. By encapsulating your applications and their dependencies in lightweight, standalone containers, Docker ensures your applications run the same way on any system with a compatible container runtime, eliminating platform-specific challenges.

With Docker containers, developers can work in a controlled environment that mirrors the production system, helping ensure that the application behaves the same way throughout its journey through the pipeline. This consistency minimizes unexpected issues and downtime, helps identify bugs early, and ultimately leads to more reliable software.

2. Portability:

Docker is known for its portability. Docker containers are platform-agnostic and run on any infrastructure with a compatible container runtime, whether on-premises, in public or private clouds, or on developer laptops, with the same behavior in each.

This portability is especially valuable for startups and SMBs that have limited resources and budgets but aspire to build cutting-edge solutions, because they can migrate workloads between environments without disruption.

Docker Containers simplify the process of setting up and maintaining development, testing, and production environments. This further empowers development and operations teams to collaborate more effectively, accelerate software delivery, and ensure applications run seamlessly across environments.

Securing the CI/CD Pipeline with Immutable Infrastructure:

What is immutable infrastructure:

Immutable infrastructure is a strategy in which infrastructure components are created in a specific state and never changed once deployed. Instead of patching and updating running components, immutable infrastructure replaces them (for example, containers or servers) with fresh, updated versions. This approach keeps the infrastructure consistent, predictable, and resistant to configuration drift.
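The replace-instead-of-patch idea can be modeled in a few lines. In this sketch, the `Deployment` type and its fields are hypothetical: a deployment description is a frozen dataclass, so any attempt to modify it in place raises an error, and an update is expressed as a brand-new value that would then be rolled out in place of the old one.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Deployment:
    """A deployed unit whose configuration cannot change after creation."""
    image: str
    replicas: int


def roll_out(current: Deployment, new_image: str) -> Deployment:
    """Produce a replacement deployment instead of mutating the running one."""
    return replace(current, image=new_image)


if __name__ == "__main__":
    v1 = Deployment(image="shop-web:1.0.0", replicas=3)  # hypothetical image names
    v2 = roll_out(v1, "shop-web:1.1.0")
    print(v1.image, "->", v2.image)
    try:
        v1.image = "shop-web:patched"  # patching in place is rejected
    except FrozenInstanceError:
        print("in-place modification rejected")
```

The old value remains intact after the update, which mirrors how an immutable setup keeps the previous version available for rollback.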

Advantages of adopting immutable infrastructure in CI/CD:

1. Reducing attack surface and simplifying updates:

One major advantage of immutable infrastructure is that it is less prone to errors and security vulnerabilities. Because changes are made only by building and deploying new, tested versions, the chances of vulnerabilities or unauthorized changes are minimized. Updates are also simpler, since deploying a new version is less complex than managing changes within existing components.

This is especially important for Docker containers when dealing with sensitive applications such as web servers and databases.

2. Enhancing Security:

Immutable infrastructure significantly enhances the security posture of the pipeline. Consistency across environments ensures that security controls and configurations are uniformly applied, minimizes vulnerabilities, and makes it easier to track changes and assess security risks.

Here are four ways to enhance security:

- Keep all software and libraries up to date with security patches.

- Implement image scanning and validation as part of your CI/CD pipeline to identify and mitigate security vulnerabilities.

- Implement role-based access control (RBAC) to restrict access to critical components and sensitive data.

- Use SSH key-based authentication instead of passwords when working with Linux servers.
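At its core, role-based access control maps roles to permissions and denies anything not explicitly granted. The roles and permissions below are hypothetical examples for a CI/CD system; in practice, you would configure RBAC in your SCM, CI server, or Kubernetes cluster rather than in application code.

```python
from enum import Enum


class Permission(Enum):
    READ_LOGS = "read_logs"
    TRIGGER_BUILD = "trigger_build"
    DEPLOY_PROD = "deploy_prod"
    READ_SECRETS = "read_secrets"  # deliberately granted to no human role below


# Hypothetical role definitions for a CI/CD system.
ROLE_PERMISSIONS = {
    "viewer": {Permission.READ_LOGS},
    "developer": {Permission.READ_LOGS, Permission.TRIGGER_BUILD},
    "release_manager": {
        Permission.READ_LOGS,
        Permission.TRIGGER_BUILD,
        Permission.DEPLOY_PROD,
    },
}


def is_allowed(roles: set[str], permission: Permission) -> bool:
    """Allow only if some role explicitly grants the permission; unknown roles get nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


if __name__ == "__main__":
    print(is_allowed({"developer"}, Permission.DEPLOY_PROD))
```

Deny-by-default is the important design choice: a misspelled or newly created role gets no access until someone grants it deliberately.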

3. Automation and Scaling:

Immutable infrastructure is inherently automation-friendly. Automation ensures rapid, consistent, and error-free resource management, supporting the dynamic demands of modern applications. Horizontal scaling (adding more containers) becomes straightforward.

Three tools that help automate and scale immutable infrastructure:

- Docker Swarm: Docker’s native clustering and orchestration mode, which lets you scale Docker by combining multiple Docker hosts into a cluster. Manager nodes in the swarm let you manage services across many hosts from a central point.

- SaltStack: A configuration management and remote execution tool that lets you control many servers from a single master server.

- Jenkins: An open-source automation server that lets you create pipelines for building, testing, and deploying your applications.

4. Zero-downtime Deployments:

Immutable infrastructure plays a crucial role in enabling zero-downtime deployments by allowing new versions to be deployed alongside existing ones, seamlessly transitioning traffic to the updated components without disruptions.

By embracing Docker’s containerization, immutable infrastructure offers the resilience and security necessary for modern CI/CD pipelines. It ensures consistency, simplifies updates, and enables the rapid provisioning of resources.

A blue-green deployment is a relatively simple way to achieve zero-downtime deployments: you create a new, separate environment for the version being deployed and then switch traffic to it, while the old environment stays available for a quick rollback.
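The blue-green switch can be sketched as a small state machine. This is a simplified model under stated assumptions: `health_check` is a hypothetical callable standing in for real smoke tests, and "switching traffic" is simply updating which environment is marked live, where a real setup would update a load balancer or a Kubernetes Service selector.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class BlueGreenRouter:
    """Tracks which of two identical environments ("blue" or "green") serves live traffic."""
    versions: Dict[str, Optional[str]] = field(
        default_factory=lambda: {"blue": None, "green": None}
    )
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Deploy to the idle environment and switch traffic only if it passes the check."""
        target = self.idle
        self.versions[target] = version
        if not health_check(version):
            return False  # the live environment was never touched, so users see no change
        self.live = target  # the "switch": stands in for updating a load balancer
        return True

    def rollback(self) -> None:
        """Point traffic back at the other environment, which still runs the previous version."""
        if self.versions[self.idle] is not None:
            self.live = self.idle


if __name__ == "__main__":
    router = BlueGreenRouter(versions={"blue": "1.0.0", "green": None})
    router.deploy("1.1.0", health_check=lambda v: True)  # hypothetical always-passing check
    print(router.live, router.versions)
    router.rollback()
    print(router.live)
```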

Immutable Infrastructure Use Case: E-commerce Website Deployment

Imagine you are deploying an e-commerce website using a traditional setup, in which you manually configure servers and update them in place as needed. This can lead to various challenges, including configuration drift, security vulnerabilities, and unforeseen downtime.

#1: With immutable infrastructure, you create a standardized server or container image that includes the web application, web server, and all dependencies. This image is well-tested and verified for security.

#2: Each time you need to make a change to the website, you don’t modify the existing servers. Instead, you create a new instance from the immutable image, with the changes already incorporated. This ensures consistency and avoids configuration challenges.

#3: Once the new instance is running and tested, you switch incoming traffic to it and retire the old one. If the new version encounters any issues, you can easily roll back to the previous version, so the website keeps working without disruption.

#4: You can create more instances from the same immutable image to handle increased traffic during peak times, ensuring that every new server is identical and ready to handle the traffic.

In the above use case, you ensure that the website remains secure, consistent, scalable, and easily maintainable. Any change is managed by creating a new immutable instance, reducing the risk of errors, simplifying troubleshooting, and enhancing the overall reliability of your e-commerce platform.

How to incorporate immutable infrastructure with Docker:

Here are four steps to consider when incorporating immutable infrastructure:

1. Automate Everything:

Use tools like Docker Compose and Kubernetes to automate the provisioning and configuration of Docker containers. Orchestration platforms such as Kubernetes can roll out new versions while maintaining high availability and zero downtime.

2. Continuous Integration:

Build and test Docker images regularly in the CI pipeline, triggered from version control, so that you maintain a history of images and ensure traceability and repeatability.

3. Immutable Image Tags:

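Use immutable tags for Docker images, such as version numbers or commit SHAs, rather than tags like latest that are routinely re-pointed, to avoid accidentally changing what an existing deployment runs. Configure your CI/CD pipeline so that each change results in a new image build with a unique tag; for the strongest guarantee, reference images by digest.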
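A CI job can derive a unique tag from the release version and the commit it built, and the deploy step can refuse references that use a floating tag. The tag format (`MAJOR.MINOR.PATCH-<short SHA>`) and the set of disallowed tags below are assumptions for illustration. A naming check cannot stop someone from re-pushing an existing tag, so some registries (Amazon ECR, for example) also offer a server-side tag immutability setting.

```python
import re

# Tags that are conventionally re-pointed to new images (assumed list), so unsafe for deployments.
MUTABLE_TAGS = {"latest", "stable", "main", "dev"}


def image_tag(version: str, commit_sha: str) -> str:
    """Build a unique tag such as '1.4.2-3f9c2ab' from a release version and a Git commit SHA."""
    if not re.fullmatch(r"\d+\.\d+\.\d+", version):
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    if not re.fullmatch(r"[0-9a-f]{7,40}", commit_sha):
        raise ValueError(f"expected a hex commit SHA, got {commit_sha!r}")
    return f"{version}-{commit_sha[:7]}"


def check_deploy_reference(image_ref: str) -> None:
    """Raise ValueError if an image reference has no tag or uses a mutable one."""
    if "@sha256:" in image_ref:
        return  # digest references always resolve to the same image content
    _, sep, tag = image_ref.rpartition(":")
    # A ':' followed by '/' belongs to a registry port (e.g. localhost:5000/app), not a tag.
    if not sep or "/" in tag or tag in MUTABLE_TAGS:
        raise ValueError(f"mutable or missing tag in {image_ref!r}")


if __name__ == "__main__":
    tag = image_tag("1.4.2", "3f9c2ab8d1e0")  # hypothetical version and commit
    ref = f"registry.example.com/shop-web:{tag}"
    check_deploy_reference(ref)
    print(ref)
```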

4. Container Orchestration:

Using container orchestration platforms such as Kubernetes, you can deploy and manage your containers. Kubernetes Deployments support rolling updates natively, and blue-green deployments can be implemented by switching a Service between two Deployments, which makes it easier to deploy and update applications safely and reliably.
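Kubernetes paces a rolling update with two Deployment settings: `maxSurge` (how many pods may exist above the desired count) and `maxUnavailable` (how many may be unavailable). The sketch below is a simplified model of how those two limits shape each step, assuming new pods become ready the moment they are created. It is not the Deployment controller’s actual algorithm, which waits for readiness probes, accepts percentages, and handles failures.

```python
def rolling_update_plan(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rolling update.

    Assumes new pods are ready as soon as they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    plan = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + max_surge.
        new = min(replicas, replicas + max_surge - old)
        # Scale down: available pods may not drop below replicas - max_unavailable.
        old = max(0, min(old, replicas - max_unavailable - new))
        plan.append((old, new))
    return plan


if __name__ == "__main__":
    for step, (old, new) in enumerate(rolling_update_plan(4, max_surge=1, max_unavailable=0)):
        print(f"step {step}: {old} old pods, {new} new pods")
```

With `max_unavailable=0`, capacity never drops below the desired count, at the cost of temporarily running extra pods; raising `max_unavailable` speeds the rollout but lets capacity dip.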

Conclusion:

Securing your CI/CD pipeline is no longer optional—it’s essential. Every stage of the pipeline, from source code management to deployment, has its own unique security challenges. By following these best practices and utilizing the right security tools, businesses can safeguard against potential vulnerabilities, reduce the risk of data breaches, and improve the integrity of their software development lifecycle.

At Amzur, we understand the complexities of securing CI/CD pipelines, especially when containers and cloud-native technologies are involved. Our team of experts can help you implement these security best practices and leverage the most advanced tools for secure, efficient, and scalable CI/CD pipelines. Let us help you safeguard your systems while you focus on driving innovation.

Director – Global Delivery